Engage your learners!

Transcript of a paper presented at eLearnExpo 2004: Paris, Moscow

..that involve, amuse, challenge, develop and measure your learners through guided, interactive task simulations.

1. The problem with traditional eLearning

Many people also confuse ‘rich’ eLearning content with ‘interactive’ content, believing that by adding animated illustrations, 3D models, audio or video clips they are creating a more compelling (and therefore more engaging) experience. This is nonsense, often expensive nonsense. Unless a learner is obliged to get involved and actually make decisions, even if it results in failure, he/she will not learn how to achieve an objective in the most appropriate way.

eLearning doesn’t have to be like this.

And then we get a job. We all still need knowledge (of products, processes or procedures) but now we face a very different challenge ~ to bring together that knowledge with skill and judgement to competently perform a commercial task.

You don’t pay your staff to remember that $2as = v^2 - u^2$ or that your company was founded in 1823 by Elias Kronsburg. You pay them to sell a new pension every week or to replace the fuel pump on a diesel generator in less than 30 minutes. These are the tasks on which enterprises succeed or fail and our eLearning programs should be designed to equip your staff with the basic approach and skills to start tackling them with confidence.

2. Secrets of creating engaging eLearning

As a set of objectives for your own programs, what if you could deliver eLearning that:

- teaches by doing not by watching?

- challenges, yet gives support when needed?

- suits all levels of ability and prior knowledge?

- helps develop skills/competence and also measures and records them?

- is so much fun to use that learners talk about it with colleagues and come back for more/to do better?

From the moment your learners start up the program, they should feel it is really high quality, something special produced just for them. They should be welcomed in and invited to explore and to experiment.

If the learners are typically a ‘games generation’ then give them a game!

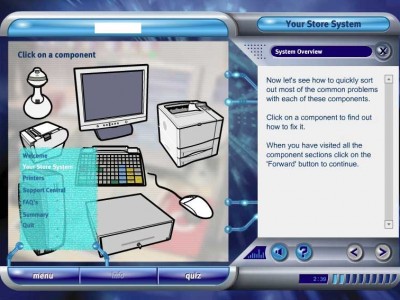

Step 2 – intrigue them to make it memorable!

The program doesn’t have to offer buttons that look like buttons.

This is a main menu interface design for a corporate induction program. Rolling the cursor over an item reveals the relevant topic menu.

This fault finding exercise gives the learner a 360 degree ‘world’ in which to explore a vehicle’s engine area to select, test and replace components.

The technology is ‘QuickTimeVR’ ~ quick and cost-effective to produce using a digital still camera fitted with a special 360 degree lens.

Visit panoramic-imaging for details of QuickTimeVR hardware and software.

3. Putting it all together

We started this discussion by identifying the challenge: to produce a compelling eLearning program that can be as helpful as necessary to a novice whilst allowing an expert to whistle through it unaided. Why make everyone wade through screen after screen, whether or not they need it?

In fact, a strong argument could be made for testing learners before they work through any content, not after! A program designed so that the unique results of each learner’s pre-assessment automatically select only those topics they need to study would deliver a perfect fit for each individual. Some people might spend ten minutes in a module, others two hours. A ‘self-configuring’ training program . . . why not?
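As a rough illustration of the ‘self-configuring’ idea, here is a minimal sketch in Python. It assumes the pre-assessment produces a score between 0 and 1 for each topic; the topic names, the `select_topics` function and the 0.8 pass mark are all hypothetical, chosen only to show the principle.

```python
def select_topics(pre_assessment, pass_mark=0.8):
    """Return the topics a learner still needs to study.

    pre_assessment maps a topic name to the fraction of pre-assessment
    questions answered correctly (0.0 to 1.0). The pass mark is an
    assumed value, not one taken from the paper.
    """
    return [topic for topic, score in pre_assessment.items() if score < pass_mark]


# Hypothetical results for one learner.
results = {"Safety checks": 0.9, "Drive belt": 0.5, "Pressure regulator": 0.7}
print(select_topics(results))  # ['Drive belt', 'Pressure regulator']
```

An expert who passes every topic gets an empty list and can go straight to the task; a novice gets the full set.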

The novel approach proposed is that learners are given a job-related task to complete, almost as soon as they start the training program. They are told what the objective is and given a range of information and tools with which they should be able to achieve it.

If they get stuck on a problem, they do what they would do in the workplace: ask for advice. How you deliver that advice is up to you . . . it could be a ‘Hints’ page, a brief tutorial on some under-pinning knowledge or perhaps a ‘virtual interview’ where they select a question from a list and an expert gives a pre-recorded audio or video explanation.

The measurement of ‘competence’ in this type of eLearning can be in highly practical terms such as ‘correctly proposing the most appropriate insurance cover in 80% of customer calls handled’.

Perhaps the learner can ‘interview’ the callers by selecting from a bank of questions and listening to pre-recorded responses. Asking the caller’s age a second time might generate the response: ‘But . . . I just told you’.

Proposing an inappropriate policy could get an angry ‘Why would I need income protection? I’m 78!’ . . . and the phone is slammed down.
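One way such a ‘virtual interview’ might be wired up is sketched below in Python. It is only an illustration: the `VirtualCaller` class and the question text are hypothetical, and plain strings stand in for the pre-recorded audio clips a real program would play.

```python
class VirtualCaller:
    """A simulated caller who answers from a bank of pre-recorded responses."""

    def __init__(self, responses, repeat_response="But . . . I just told you."):
        self.responses = responses          # question -> recorded answer
        self.repeat_response = repeat_response
        self.asked = set()                  # questions already put to the caller
        self.repeats = 0                    # how often a question was repeated

    def ask(self, question):
        # A repeated question gets the irritated reply and is counted,
        # so the assessment can take it into account later.
        if question in self.asked:
            self.repeats += 1
            return self.repeat_response
        self.asked.add(question)
        return self.responses[question]


caller = VirtualCaller({"How old are you?": "I'm 78."})
print(caller.ask("How old are you?"))  # I'm 78.
print(caller.ask("How old are you?"))  # But . . . I just told you.
```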

This type of assessment gives far more insight than a score of 80% in a multiple-choice quiz. In a conventional quiz, each question stands alone ~ an isolated test of recalling a fact. In ‘task based’ assessments the learner must bring together all of the knowledge that would have been tested in a normal quiz plus the use of judgement.

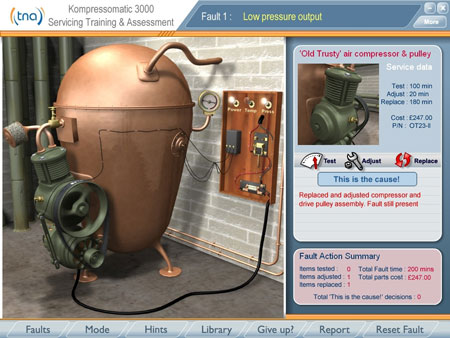

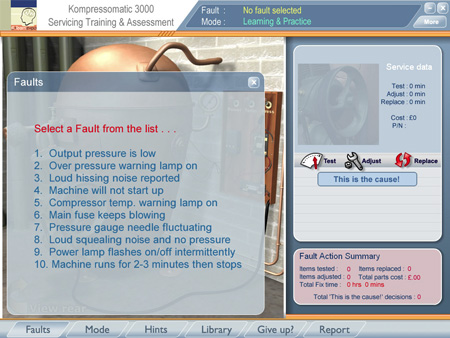

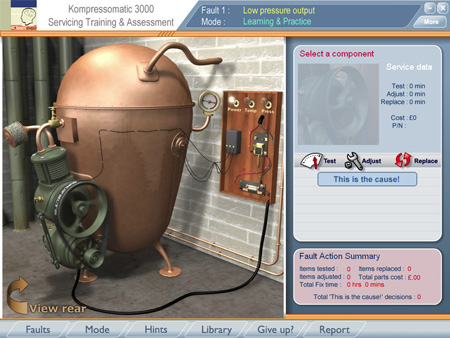

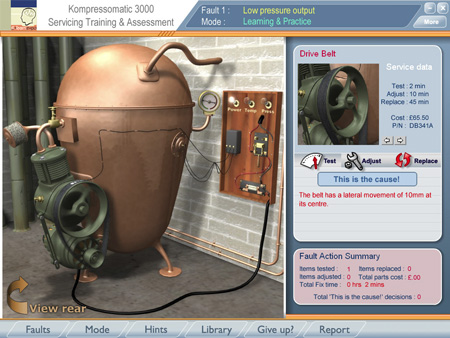

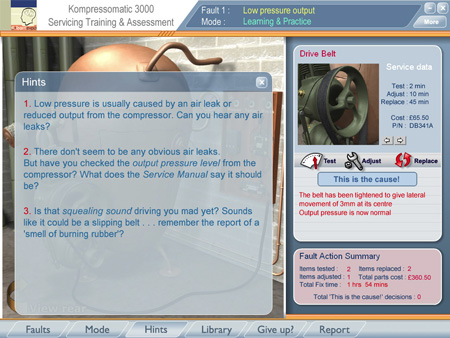

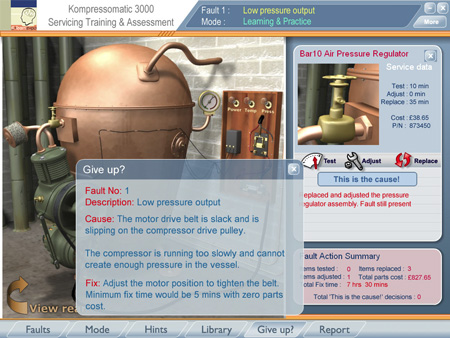

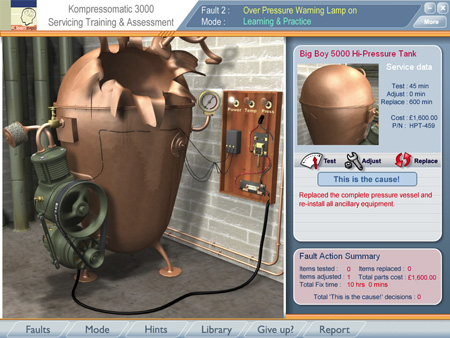

Introducing the Kompressomatic 3000

As an example of this ‘task based’ approach in action, a fully operational training and assessment simulation was produced by TNA Ltd on the well known Kompressomatic 3000 high pressure industrial air system. This working model has been demonstrated at eLearnExpo and other international eLearning conferences in 2004.

- electric motor

- compressor, drive pulley and drive belt

- pressure regulator

- hand-beaten copper pressure vessel

- pressure gauge

- over-pressure & over-temperature sensors and warning lamps

- control unit

- fuse

- on/off switch

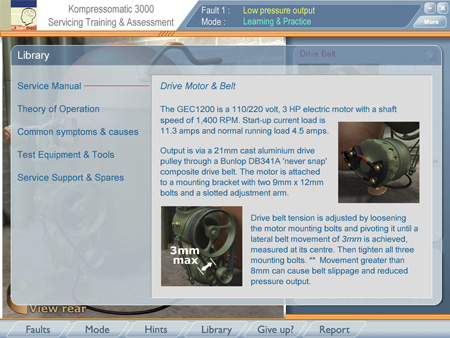

Each component may be selected and then tested, adjusted and replaced. Every action carries with it a cost, both in actual job time and in parts. When the learner takes an action, they receive feedback according to the nature of the fault.

The data for each fault can be stored in separate text files or in a faults database.
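To make this concrete, the sketch below shows one possible shape for a fault record in Python. It is an assumption about how such data might be organised, not a description of the actual program: the `Outcome` class, the `perform` function and the zero parts costs are hypothetical, while the times and feedback follow the loose drive belt example described in this paper.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    minutes: int        # job time added by the action
    parts_cost: float   # cost of any parts used, in pounds
    feedback: str       # message reported to the learner


# One fault, keyed by (component, action).
LOOSE_BELT = {
    ("drive belt", "test"): Outcome(2, 0.0, "The belt has 10mm of lateral movement."),
    ("drive belt", "adjust"): Outcome(10, 0.0, "Output pressure is now normal."),
}


def perform(fault, component, action, job):
    """Apply one learner action: add its cost to the job and return the feedback."""
    outcome = fault.get((component, action))
    if outcome is None:
        # Assumed default for actions this fault record does not describe.
        return "No change."
    job["minutes"] += outcome.minutes
    job["parts_cost"] += outcome.parts_cost
    return outcome.feedback


job = {"minutes": 0, "parts_cost": 0.0}
perform(LOOSE_BELT, "drive belt", "test", job)
perform(LOOSE_BELT, "drive belt", "adjust", job)
print(job)  # {'minutes': 12, 'parts_cost': 0.0}
```

Keeping each fault as plain data means new faults can be added without touching the program logic, whether the records live in text files or a database.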

We can see the belt flapping back and forth on the drive pulley as it rotates.

The pressure gauge is reading about 1/3 full scale and the operator reports recent low pressure output.

They choose ‘Test’ and the program reports that the belt has 10mm of lateral movement. Two minutes are added to the total job time, the time it would have taken to stop the machine and measure the belt deflection.

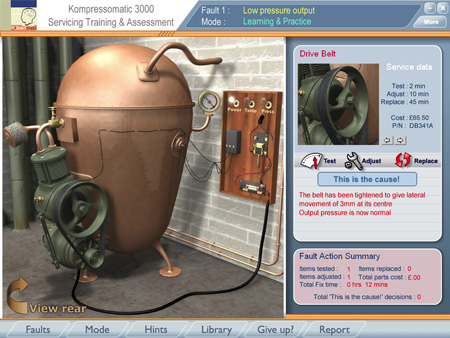

Ten minutes are immediately added to the job time and the program reports that output pressure is now normal.

They switch the machine on and now the motor runs smoothly, the belt is tight, the squealing has stopped and the pressure gauge is reading almost maximum output.

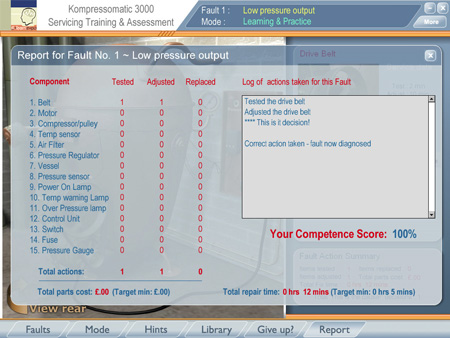

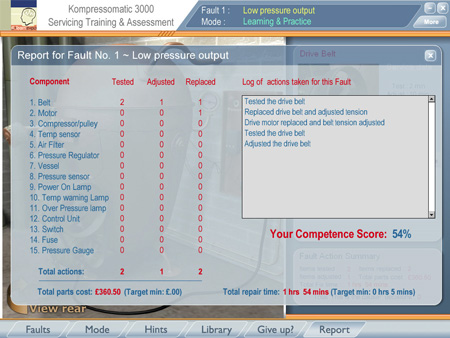

The ‘Report’ button displays an analysis of every action they took with a summary of their own performance compared with a target ‘best possible’.

In this case, they opted to test the belt before adjusting it but still managed to score 100% competence.

He/she managed to replace not only the drive belt but the motor too, incurring a total parts cost of £360.50 and a job time of almost two hours.

The program deducted points for all actions taken and any time or cost incurred beyond the target to arrive at a score of 54%. Where you set the actual competence ‘pass’ level is up to you.

Each time they open this panel a new Hint is given ~ just like asking an expert (but infinitely patient) colleague for guidance. Each request for a Hint knocks another 5 points off their score.
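The exact scoring formula is not given here, so the following Python sketch is only one plausible reading of it. The 5 points per Hint comes from the text; the `competence_score` function, the other weights and the 12 minute target are assumptions made for illustration.

```python
def competence_score(minutes, parts_cost, hints_used,
                     target_minutes, target_cost,
                     points_per_minute=1.0, points_per_pound=0.1,
                     points_per_hint=5):
    """Start from 100 and deduct for time and cost beyond the target, and for Hints.

    Only points_per_hint (5) is taken from the paper; the other
    weights are assumed values.
    """
    extra_minutes = max(0, minutes - target_minutes)
    extra_cost = max(0.0, parts_cost - target_cost)
    score = (100
             - extra_minutes * points_per_minute
             - extra_cost * points_per_pound
             - hints_used * points_per_hint)
    return max(0, round(score))  # never report a negative score


# A learner who matches an assumed 12 minute, zero cost target.
print(competence_score(12, 0.0, 0, target_minutes=12, target_cost=0.0))  # 100
# The same job, but with two Hints requested along the way.
print(competence_score(12, 0.0, 2, target_minutes=12, target_cost=0.0))  # 90
```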

The theory of operation of each component and the correct procedure for testing, adjustment and replacement are all described in these screens.

Interested in Boyle’s Law? It’s all explained here in practical terms. Need to explore and learn about what can go wrong with the Kompressomatic 3000 and why? Just click on a symptom and an expert explains all you need to know and be able to do.

The program can run in two Modes: ‘Learning and Practice’ or ‘Assessment’. In ‘Assessment’ Mode, no Hints or Library access is allowed. Whether or not a learner is measured in any way, or their actions reported in the way we have shown, is up to you.

Direct links could be added from this panel to relevant revision screens.

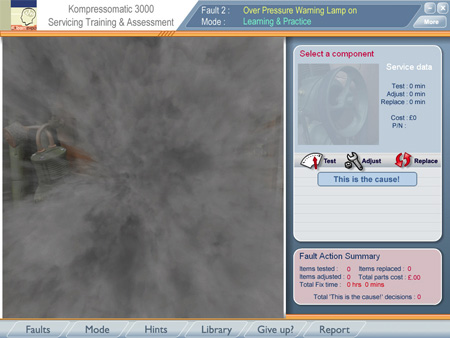

How about Fault No.2 ~ the operator reports the over-pressure warning lamp started flashing and they immediately switched the system off.

The novice (unaware of the important warning given in the section on fault symptoms) switches the machine on and a few seconds later the pressure vessel explodes.

(And yes, the model does explode with full dramatic sound effects and gushing vapour.)

If they do replace the vessel and try again, it will just blow again – another £1,600. But if they thought before switching on, maybe do a bit of research, perhaps test the pressure regulator, they might discover the correct, safe way to tackle the fault would take only 35 minutes and would cost under £40.

In conclusion

This interactive example could be enhanced and improved in many ways. It took about 15 days to create, including the wonderful working 3D models with their echoes of Wallace and Gromit.

The model was not intended to be a definitive program but an illustration of how you might combine guided learning with feedback, best practice advice, safe experimentation and an assessment of competence in completing a job-related task.

This type of program serves several roles . . .

- as an enjoyable, engaging, guided voyage of discovery for learners

- as an opportunity for those learners to prove that they can use their judgement to apply the skills and knowledge they have acquired in a competent manner

- and as a highly revealing assessment for job applicants

Other suitable topics

There is no reason why the same techniques cannot be applied successfully to other eLearning topics as well as to equipment servicing, for example…

- Virtual audio/video ‘interviews’ with prospects – the phone rings, they answer it

- Probing caller for needs/facts/worries/budget/authority etc, from a bank of questions

- Able to select/configure/propose the most appropriate product solution

- Expert sales people may be interviewed on techniques/approach/advice

- Product background material, competitive data, etc, available as needed

- Expert feedback after each call gives ‘so what should you have done?’ guidance

- Virtual company with interactive departments, created in QuickTimeVR

- ‘Hot spots’ on people lets new employees interview them from a bank of questions

- Able to move between departments (each room has ‘hot spots’ to next room)

- Products/services can be explored by visiting the ‘cinema’ – choose a video briefing

- Visit their own department to explore the job they will be doing – ask experienced staff what it’s like, how they overcome specific problems, etc. All questions are chosen from a large bank and all responses are pre-recorded; audio is more effective and far cheaper to produce than video.

- Practise handling a variety of callers . . . angry, obscure, evasive, etc

- Response/reaction interactions can be planned to be very realistic – poor decisions lead to anger, frustration and a terminated call. Use actors, not your own staff.

- All actions taken are logged and can be replayed for review with a supervisor, or side by side with a ‘best practice’ example of how that call should have been handled

- Assessment can be measured based on factors such as how many ‘positive’ or ‘complimentary’ caller responses were uttered during the call, how many times the same question was asked, how long the call lasted, etc.

For each call, a ‘model’ response would have been built which defines the ideal responses/questions, the outcome (did they offer the caller a £100 cash refund when they should have offered to replace the product?) and the call length.

Penalties are then applied by the program for actions which deviate from the ‘model’ performance.

- Record an application being used correctly to carry out a task – let learners view these ‘videos’ as ‘Show Me’ examples

- Create simulated application ‘Try it’ sequences where the learner has to choose the correct options/buttons and enter the correct text. Incorrect actions receive feedback; on the third incorrect attempt at an action the program shows them what to do.

- Every action can be logged and reported against a model of the ‘ideal’ performance.

Reaching competence might be defined as ‘creating a new customer account record within 3 minutes, using no more than 15 mouse or keyboard actions’.

Penalties could then be applied to their score by subtracting (say) 10 points for each additional minute spent, 5 points for each additional mouse/keyboard action.
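The arithmetic for this example can be sketched in a few lines of Python. The targets and penalties are the ones suggested above; the `account_task_score` function, the starting score of 100, the floor of zero and the choice to count a part minute as a whole minute are assumptions.

```python
import math


def account_task_score(minutes_taken, actions_taken,
                       target_minutes=3, target_actions=15,
                       per_minute=10, per_action=5):
    """Score a 'create a new customer account' attempt out of 100."""
    # Assumption: a part minute over the target counts as a whole extra minute.
    extra_minutes = max(0, math.ceil(minutes_taken) - target_minutes)
    extra_actions = max(0, actions_taken - target_actions)
    score = 100 - extra_minutes * per_minute - extra_actions * per_action
    return max(0, score)  # assumed floor so the score never goes negative


print(account_task_score(2.5, 14))  # 100: within both targets
print(account_task_score(4.2, 18))  # 65: 2 extra minutes (-20), 3 extra actions (-15)
```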